Why Functional Programming is (Still) Relatively Unpopular?

Table of Contents

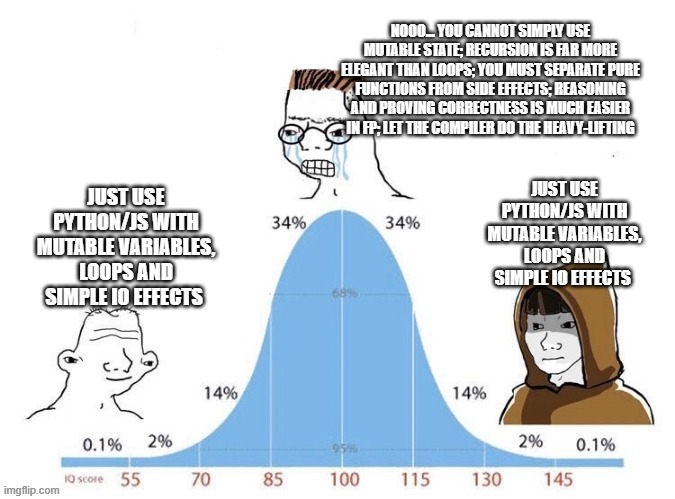

Some time ago I wrote a post arguing why every developer should know both functional and imperative programming. My position on this matter hasn’t fundamentally changed since then, but I do have spend some more time thinking about it. If both approaches are equally valid and, according to some, FP even has more advantages, why is it that there are still way less functional programmers? Let’s explore this further in this post.

Computational Equivalence and Irreducibility

Recently I’ve become somewhat acquainted with some of Stephen Wolfram’s ideas regarding computation as a new perspective on science (as a sidenote, I swear, this guy either is a genius decades ahead of his time, or he just found a cute meta-analogy for most of mathematics and physics). The two core principles in this view are: computational equivalence and computational irreducibility. Both of these ideas are not new by any stretch of imagination and have been known since at least the orignal works of Turing, but, according to Wolfram, they are much more fundamental than previously assumed. The principle of equivalence basically states that each known type of computation is equally expressive and powerful. By type of computation we not only mean classical definitions like Turing Machines or Lambda Calculus, but also any type of process occurring in the natural world, such as neuron firings inside our brains or air molecules forming the chaotic patterns of weather during a storm. The second principle concerns the inherent irreducible nature of computation. It states that, in general, you cannot reduce one complex computation to a different, simpler computation. You might call this non-sense, since seemingly the entire point of modern science is to try to come up with models which would simplify and abstract the complexities of the physical world, thus allowing us to make predictions about future events, without having to wait for these events to actually occur by themselves. The key word here is “in general”. The argument here is that it is indeed sometimes possible to come up with reductions in the form of mathematical equations like Newton’s laws of motion or Schroedinger’s wave function formula, but these reductions are only valid to a certain degree of correctness and should only be treated simple approximations. Moreover, the existance of these laws and our ability to discover them is only possible because we live in these “pockets of reducibility”, that is subregions of the entire space of possible computations which exhibit certain repeatable patterns. All these cool ideas are interesting by themselves and are certainly worth a post in the future, but let’s get back to our topic. The main conclusion from the principle of equivalence, so the idea that functional programming is in theory equivalent to imperative programming is kind of obvious at this point, so let’s just skip it and move to the more interesting conclusions from the second principle. One can often hear in certain communities that their compiler is super awesome since it can basically do all the heavy lifting for them: they only need to write the actual logic and if their code sucessfully passes the strict and sophisticated type-checking of the compiler then you can be almost certain that your program is indeed correct and does not contain at least certain classes of bugs. And to be frank, this viewpoint is in principle correct. We know from the Curry–Howard isomorphism that logical propositions indeed do correspond to computer programs. In principle you could write a logical proposition in the form of a function signature, implement a function corresponding to this signature, and if this function correctly type-checks that you proposition is indeed correct. This is the entire premise on which automated proof-checking is based upon (by the way, I think that Lean is absolutely awesome and will definitely write more about it in the future). In practice, however, things are not so easy. It’s actually quite difficult to properly map you business logic into type-checkable propositions and, more importantly, the principle of computational irreducibility tells us that in the end, the only absolute way of knowing whether your program is actually correct is to simply run it. What does it mean in practice? No matter how sophisticated your compiler is, how many static checks it performs, how much compile-time inspections it does, it still cannot guarantee 100% correct behaviour from an outside user perspective. So, what will? Tests. Tests which actually run your code. Okay, maybe tests will not guarantee correctness but they certainly can be useful to prove incorrectness (just like in science, you cannot prove a theory is valid, but a single experiment can easily disprove it). So in the end you always want to have a reasonable amount of tests, no matter if your program is written in Haskell or in C++. So since we should have as much tests as possible anyway, is it really worth it to increase the complexitiy of the codebase by adopting definitions straight from abstract algebra, which in turn would inevitably lead to longer compilation times, just to give you a possibly false sense of correctness? Maybe. But maybe not always. Some might argue that for most cases it’s not worth it. And if you want more static checks at build time, each language, even the most dynamic one, has usually many optional third-party static analyzers and type-checkers.

Algorithms in FP-land

This is an interesting issue. On one hand, when you think about algorithm design and analysis, you usually think of implementing them in an imperative language like C or Java. Using a functional language, like Haskell, which does not support mutability, makes some of the algorithms harder to implement than others. Some kinds of algorithms and data structures cannot be implemented without mutations at all (although you can usually simply design the application to not use them). Therefore, when using FP, you always have to create persistent data structures, that is in-memory structures which preserve their entire history of edits. This can be a drawback, since such implementation are usually harder and possibly require more memory. At the same time, using such immutable structures is very convenient in multi-threaded applications. The main conclusion here is that designing and implementing algorithms using pure FP can be tricky, but worthwhile in highly concurrent environments. For more info on how to handle data structures in a purely functional way I recommend “Purely Functional Data Structures”.

(Tail) Recursion and CPS

We know that each recursive program can be transformed to an iterative one. It might not be an particularly elegant or easy to understand program, but it can be theoretically done. This is nice, since it allows engineers to come up with an easy recursive algorithm to a problem and then almost mechanically tranform it to an iterative equivalent. In FP, you are of course encouraged to always use recursion instead of explicit looping structures. And since each recursive call incurs a time and memory cost in the form a call stack allocation, you are also encouraged to use certain tricks such as tail-recursion to alleviate this issue. The natural question is: is each recursive program always transformable to an equivalent program with only tail calls? Yes, it is, using for example a technique known as Continuation-Passing-Style. In this approach you explicitly pass the state of the routine execution as a parameter. However, using CPS is not a silver bullet, it does help to easily implement certain kinds of algorithms, but it objectively makes the code less readable (it’s quite similar to GOTO in imperative programming or callback hell in event-loop environments like early JS).

FP as a Gauge of Problem Solving Skills

A famous person once said that “you have to be this tall to use functional programming in production”. In other words, presumably functional programming is inherently more difficult than simple imperative programming. Therefore, if you want to hire the absolute best software engineers in the whole industry, you should hire functional programmers. FP is popular in fintech companies, since in these environment managment has this somewhat weird notion that simply hirigh FP talent will automatically lead to better quality code. To a certain degree, I kinda agree with this argument and see where is it coming from, but at the same time you don’t see Google or Facebook include Haskell questions in their interview processes, moreover, AFAIK, FP is very rarely used in these big tech companies. I think that I agree with them in this regard: asking a few relatively easy math questions (probability, combinatorics, algebra) and 2-3 relatively hard algorithms questions is a far better way of measuring how good a given candidate is in general problem solving than asking them what is a difference between a monoid and a semi-group, or what is a lens.

“FP is a scam invented by software consultancy companies to sell you more software consultancy”

Okay, this paragraph is probably only a meme (or is it?) but I do find it funny that there are a lot of companies whose entire business model is based on providing solutions for other companies which embraced functional programming, it’s not like their sole existence is premised on popularizing FP in every conceivable enrivonrment, while simultaneously consciously concealling the many drawbacks and added complexities incurred when adopting FP systems. Surely they wouldn’t dare to overhype this approach to large-scale software development of complex systems, praising its (questionable?) benefits, while also knowing full well that in practice FP can lead to worse long term maintainability and capacity to evolve, right? Right??

And yes, I know that FP has a rich and long history in many branches of theoretical computer science.

*puts down tinfoil hat*

The Curious Case of Cardano

One particularly interesting example of recent FP adoption in a large project is definitely Cardano. I’m glad that they embraced FP in this project, since it will definitely lead to more people learning about FP, however, I also think that an important reason as to why Haskell and Scala were chosen as the languages of choice was because the founder, Charles Hoskinons, is a known enthusiast of maths, formal methods and, by extension, FP. Personally, I think this was kind of a mistake, since writing an entirely new, open platform in a (still) relatively exotic and somewhat obscure language will inevitably detract a large portion of potential developers interested in building on top of it in the future. On the other hand, maybe using Haskell and more formal methods of software development will ultimatelly save them from a future $100M hack, which already happened in some crypto projects. Only time will tell. It certainly is interesting to watch a project of this scale and innovation level be created using mostly functional and formal methods.