How I Learned to Stop Worrying and Love the Manifold

When I was in high school, I was really into physics. Later, even though I chose to pursue a degree in Computer Science, my love for physics did not go away. Quite the opposite: my math classes at uni allowed me to finally go deeper into more advanced physics topics (until then I had really only read the first volumes of Feynman’s lecture textbooks; GR and QM were beyond my skills at that time). In my spare time, I watched Susskind’s lectures on YT, read more books, and things were in general going smoothly. And then came General Relativity and QFT - tensors, curvatures, gauge theory, etc. I noticed that my math skills were again lacking. I tried to learn differential geometry on my own (shoutout to an awesome channel teaching this subject - XylyXylyX), but had to stop because other stuff was more important (BSc thesis, MSc exam, internship).

Fast forward about a year: during the quantum computing classes in my Master’s program, I participated in a research project whose goal was to improve the performance of a famous quantum machine learning optimization method - Quantum Natural Gradient. It was an awesome project and I definitely learned a lot. However, the same math topics showed up (covariant derivatives, differential forms, curvature tensors, etc.), and I was yet again forced to admit to myself that I did not understand some of them to a satisfying level. Irritating, but oh well.

During my MSc degree, I took classes in machine learning, image recognition, NLP and DL. I was (and still am) really enjoying ML; however, I gradually started to notice that more and more arXiv papers use some advanced but still familiar math. In general, an increasing number of concepts from theoretical physics are becoming applicable in modern ML - tensors, gauge equivariance, non-Euclidean manifolds, among others. A new, differential-geometric formulation of neural network theory is growing in popularity. In fact, even the official keynote presentation of one of the most important conferences in ML this year was focused on Geometric Deep Learning (link). In my MSc thesis, I conducted experiments on the geometric structure of loss landscapes in deep nets. At that point, it became clear to me - if you want to go deep into modern ML, you need to know differential geometry. It is the future, period. I think that next-gen Machine Learning degrees really need to incorporate this branch of mathematics into their curricula in the coming years. ML is no longer only about calculus, algebra and probability.

Finally, a couple of months ago, I came across Eric Weinstein’s Gauge Theory of Economics. This was probably the tipping point for me - I decided it’s time to finally dive deep into differential geometry (+ a bit of group theory). For real this time.

After remembering that my calculus classes ended with an introduction to topology, I decided to start this journey with Lee’s classic “trilogy”: Introduction to Topological Manifolds, Introduction to Smooth Manifolds and Introduction to Riemannian Manifolds.

Currently, I’m still in book 1, but I can already see that this investment is paying off (in the future, I’ll try to regularly post updates on some interesting topics, especially regarding their applicability in ML).

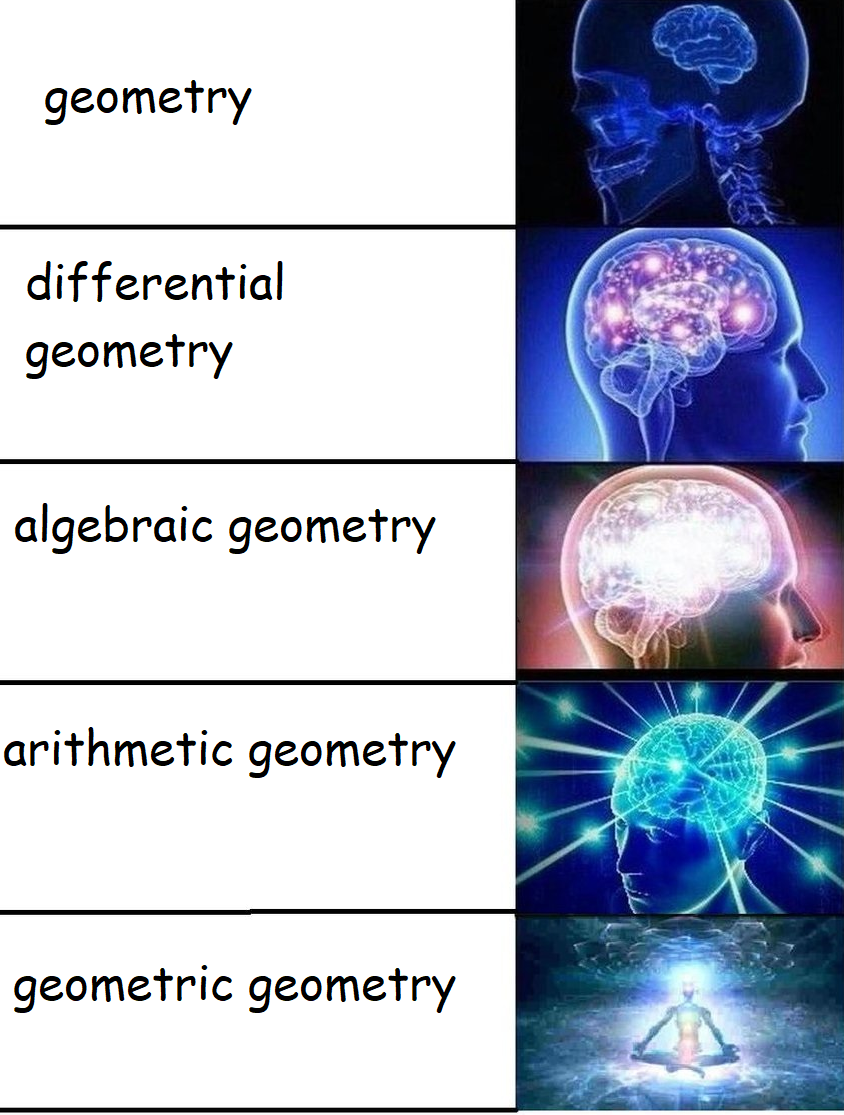

In summary, what I’m trying to say in this awkwardly personal post is that (differential) geometry often does not get enough credit and love. Most of us learn Euclidean geometry in high school and that’s basically it. And even though most of us also go on to learn calculus and topology in college (the two practical prerequisites for DG), we stop there. And that sucks. For it is differential geometry that gave us general relativity, quantum field theory and the standard model. According to some (specifically, descendants of Albert Einstein’s school of geometric physics), it is probably geometry (and not string theory) that will lead to further theoretical unifications in physics. It has applications in engineering, computer graphics and even economics. Finally, it’s now more and more clear that it will also play a fundamental role in the advancement of machine learning in the coming years. So, if you’re a nerd like me, who likes physics, math and machine learning, I’d say you can’t go wrong with learning more about this awesome subject.